Grading Platform

Building on our comprehensive analysis of open-ended assessment challenges and opportunities, we have developed an innovative grading platform that directly addresses the key issues identified in our research. Our knowledge base revealed significant gaps in existing solutions, particularly regarding consistency and efficiency. The classification of assessment systems as high-risk applications, combined with our survey findings, guided our development of a human-centric platform that enhances rather than replaces human judgment.

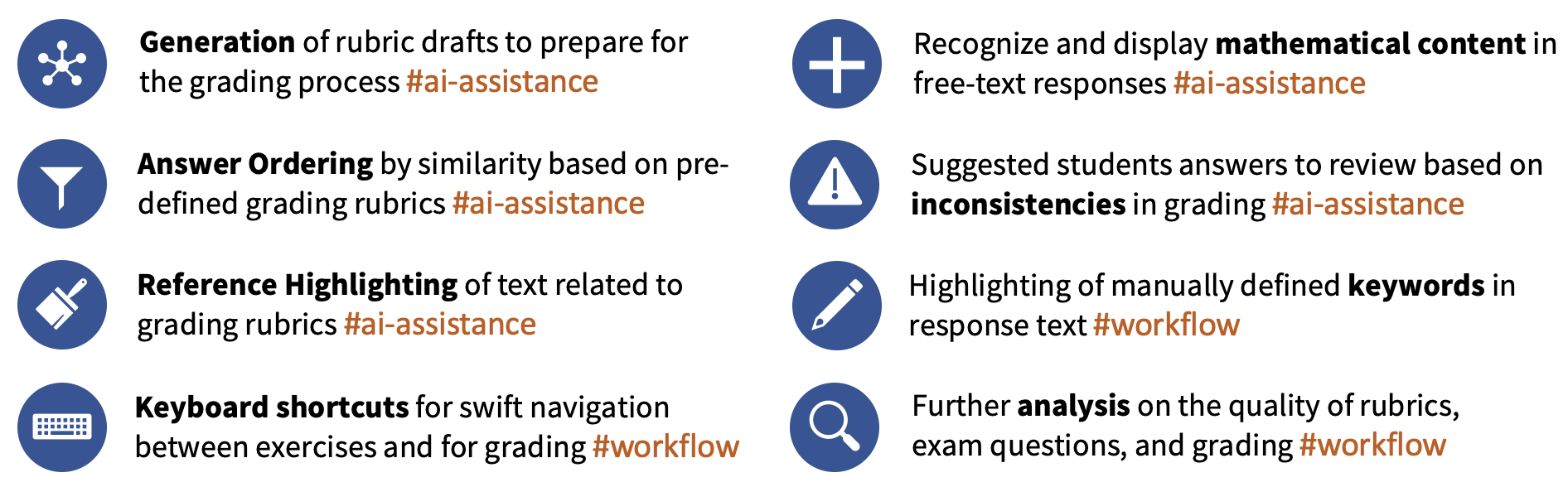

The platform implements a carefully designed approach to AI assistance that aligns with both educational best practices and regulatory requirements. Our surveys revealed that workload (rated 3.95/5) and grader inconsistency (3.50/5) represent the most significant challenges in open-ended assessment. To address these issues while maintaining human oversight, we developed five key AI features and some auxiliary features that enhance the grading process without compromising human judgment.

Rubric Generation

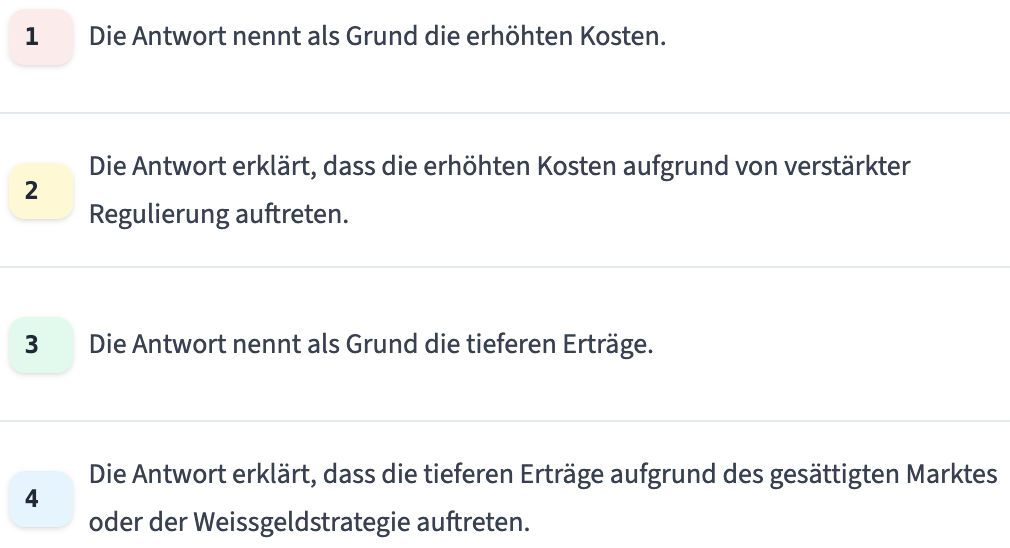

The development of high-quality rubrics is crucial for consistent and fair assessment. Our research indicated that rubric-based grading was rated as the most important feature (4.46/5) by surveyed lecturers, with three out of five interviewed participants already using such predefined grading criteria. A rubric is a structured scoring guide that defines specific criteria and associated point values for assessing student responses in an exam. Rubrics break down complex assessment tasks into measurable components, allowing for consistent evaluation across different graders.

- Example grading rubrics

Our platform offers AI-assisted rubric generation to support lecturers in creating comprehensive assessment criteria. The system generates rubric suggestions based on three key inputs: the question content, example solutions (if available), and/or specific grading instructions. These AI-generated rubrics serve as a starting point that lecturers can refine and adjust according to their needs.

The AI-generated rubrics are designed to be atomic and measurable, facilitating consistent application across different graders and exams. This is particularly important as the rubrics serve as the foundation for most other AI-assisted features in our platform, including content highlighting and answer clustering, while ensuring that the final grading decisions remain firmly in the hands of human graders.

The rubric generation process follows a structured approach that ensures both quality and human oversight. First, the AI analyzes the question and example solutions to identify key concepts and potential assessment criteria. It then generates a set of rubrics that cover different aspects of the expected answer, including both content-related criteria and structural elements, as specified by the grading instructions. Each rubric includes a clear description and a suggested point allocation. Lecturers review and approve these rubrics before they are used in the grading process, maintaining full control over the assessment criteria.
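The review-and-approve step described above can be illustrated with a small data model. This is a minimal sketch under our own assumptions, not the platform's actual schema: the class names `Rubric` and `Question`, the field names, and the `approved` flag are hypothetical and chosen only to show that AI-suggested rubrics stay inactive until a lecturer approves them.

```python
from dataclasses import dataclass, field


@dataclass
class Rubric:
    """One atomic, measurable criterion (field names are illustrative)."""
    description: str
    points: float
    approved: bool = False  # assumed flag: set by the lecturer, never by the AI


@dataclass
class Question:
    text: str
    rubrics: list[Rubric] = field(default_factory=list)

    def approved_rubrics(self) -> list[Rubric]:
        # Only lecturer-approved rubrics reach the grading stage.
        return [r for r in self.rubrics if r.approved]

    def max_points(self) -> float:
        # Maximum score is derived from approved rubrics only.
        return sum(r.points for r in self.approved_rubrics())
```

In this sketch, a freshly generated rubric contributes nothing to `max_points()` until the lecturer sets `approved = True`, which mirrors the requirement that the assessment criteria remain under human control.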

Rubric Highlighting

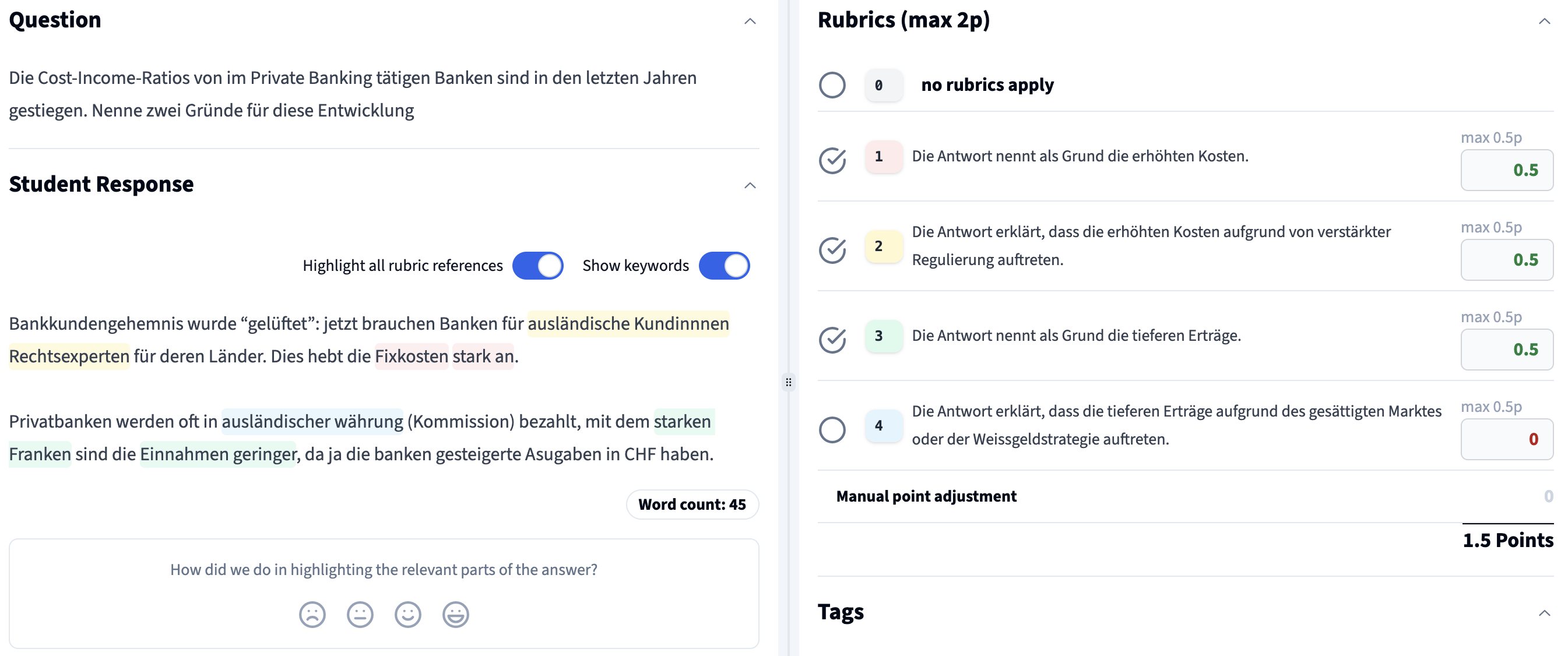

Our interview findings revealed that lecturers explicitly look for keywords when grading text exercises, suggesting the value of automated content identification. Content highlighting is helpful for efficient grading of open-ended responses, particularly when working with comprehensive rubrics across multiple graders.

Our platform implements AI-powered highlighting that visually connects parts of student responses to specific rubrics. The system analyzes each response and highlights text passages in different colors corresponding to the rubrics they might relate to. This visual aid is designed to help graders quickly identify relevant content while maintaining their autonomy in determining whether the highlighted content fulfills the rubric requirements.

- Example of Rubric Highlighting

Instead of proposing scores or indicating fulfillment, the highlighting simply draws attention to potentially relevant content. This design choice directly addresses concerns expressed in our interviews about maintaining human oversight while leveraging AI assistance. The highlighting serves purely as a cognitive aid: it helps graders focus their attention, and because it never proposes a grading decision, it avoids introducing AI bias into the process.

The highlighting process utilizes language models to understand both the rubric requirements and student responses in context. For each rubric, the system identifies text passages that semantically relate to the rubric's criteria, regardless of specific keyword matches or whether the text passages are positively or negatively related. This approach helps identify relevant content, even when students use different terminology or express concepts in various ways.
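One way to represent the result of this step is as character spans tagged with a rubric identifier. The following is a minimal sketch under stated assumptions: the names `Highlight` and `segment` are hypothetical, the spans are assumed to come from the language model, and they are assumed not to overlap. Note that a span carries no score or fulfillment field, in line with the design described above.

```python
from typing import NamedTuple, Optional


class Highlight(NamedTuple):
    start: int      # inclusive character offset in the response
    end: int        # exclusive character offset
    rubric_id: str  # rubric the passage may relate to


def segment(response: str, highlights: list[Highlight]) -> list[tuple[str, Optional[str]]]:
    """Split a response into (text, rubric_id) pieces.

    rubric_id is None for unhighlighted text. Assumes the highlights
    do not overlap; a real interface would need a rule for overlaps.
    """
    pieces: list[tuple[str, Optional[str]]] = []
    cursor = 0
    for h in sorted(highlights):  # tuples sort by start offset first
        if h.start > cursor:
            pieces.append((response[cursor:h.start], None))
        pieces.append((response[h.start:h.end], h.rubric_id))
        cursor = h.end
    if cursor < len(response):
        pieces.append((response[cursor:], None))
    return pieces
```

A front end could then map each `rubric_id` to a color and render the pieces in order, leaving the judgment of whether a passage fulfills the rubric to the grader.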

Answer Ordering

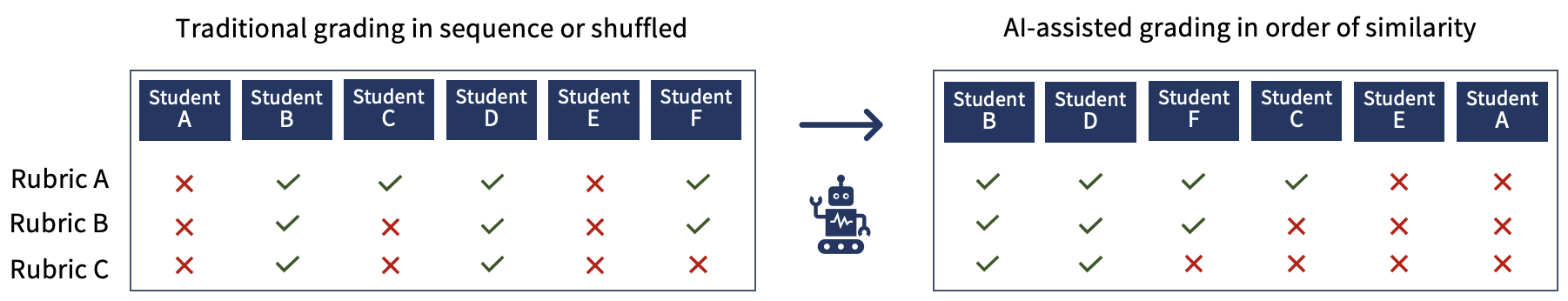

Efficient grading of large exam cohorts requires strategies to reduce cognitive load and potential biases. Our research revealed that all interviewed participants prefer exercise-by-exercise grading over student-by-student approaches, with some explicitly mentioning shuffling as a bias reduction technique.

Our platform implements AI-powered answer ordering that presents student responses in an optimized sequence. Rather than showing answers in random order or using simple shuffling, the system leverages AI-generated grading vectors for each response. These vectors contain numerical scores for each rubric criterion, creating a comprehensive representation of the response content. Importantly, these grading vectors are never shown to human graders to prevent the introduction of AI bias into the assessment process.

- Visualization of Answer Ordering

The ordering is achieved through vector distance calculations between these AI-generated grading vectors. The system arranges responses to minimize the overall distance between consecutive answers, ensuring that responses with similar grading patterns appear together. This approach is designed to reduce cognitive load from constant context switching between different answer types and helps maintain consistent grading across similar responses. This directly addresses challenges like workload and bias as identified in our research.
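The idea of placing similar grading vectors next to each other can be sketched with a greedy nearest-neighbor pass. This is an illustrative heuristic, not necessarily the procedure used by the platform: the function name `order_responses`, the choice of Euclidean distance, and the choice of the first response as the starting point are all assumptions. The greedy pass keeps consecutive answers similar but does not guarantee the minimal total distance.

```python
import math


def order_responses(vectors: dict[str, list[float]]) -> list[str]:
    """Greedy nearest-neighbor ordering of response ids.

    Starts from the first response and repeatedly appends the closest
    unvisited one (Euclidean distance between grading vectors).
    """
    if not vectors:
        return []
    remaining = dict(vectors)  # copy so the caller's dict is untouched
    current = next(iter(remaining))
    order = [current]
    current_vec = remaining.pop(current)
    while remaining:
        nearest = min(
            remaining,
            key=lambda rid: math.dist(current_vec, remaining[rid]),
        )
        order.append(nearest)
        current_vec = remaining.pop(nearest)
    return order


# Hypothetical grading vectors: one AI-estimated score per rubric criterion.
vectors = {
    "a": [2.0, 1.0, 0.0],
    "b": [0.0, 0.0, 0.0],
    "c": [2.0, 1.0, 1.0],
    "d": [0.0, 1.0, 0.0],
}
print(order_responses(vectors))  # ['a', 'c', 'd', 'b']
```

Only the resulting order of response ids would be passed on to the grading interface; the vectors themselves stay hidden from graders.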

Math Parsing

Mathematical expressions in student responses often appear in various formats, from simple inline calculations in free-text editors to complex LaTeX formulas. A small test case in Mathematics revealed that inconsistent notation and formatting can significantly increase cognitive load during grading.

Our platform implements automated mathematical expression parsing that converts raw text representations into standardized LaTeX notation. When students write mathematical expressions in plain text format (e.g., "x^2-6" or "sqrt(4)"), the system automatically converts these into properly formatted mathematical notation. The original text remains visible for verification, but the standardized format helps graders quickly understand the mathematical structure of the response.

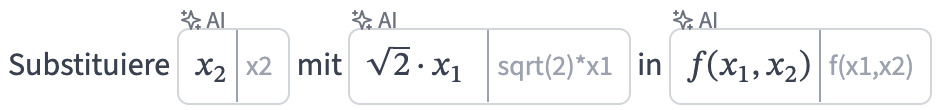

An example of math parsing in action for both a normal and a highlighted part of the student response is shown below:

- Example of Math Parsing

- Example of Math Parsing with Highlighting

The parsing functionality operates through specialized AI prompts that identify and interpret mathematical expressions within the broader context of student responses. This is particularly valuable in responses that mix textual explanations with mathematical reasoning, as it helps graders focus on the conceptual understanding rather than decoding various notation styles. By standardizing the presentation of mathematical expressions, the system reduces interpretation errors and improves grading efficiency while maintaining the original response for reference.
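To make the target of this conversion concrete, the sketch below maps the two plain-text examples mentioned earlier to LaTeX. It deliberately swaps in a simple rule-based approach with regular expressions in place of the AI prompts the platform uses, so it covers only a handful of patterns. The function name `to_latex` is hypothetical.

```python
import re


def to_latex(expr: str) -> str:
    """Convert a few plain-text math patterns to LaTeX (illustrative only)."""
    # sqrt(...) -> \sqrt{...}; handles only arguments without nested parentheses
    expr = re.sub(r"sqrt\(([^()]*)\)", r"\\sqrt{\1}", expr)
    # x^2 or x^10 -> x^{2}, x^{10}; alphanumeric exponents only
    expr = re.sub(r"\^(\w+)", r"^{\1}", expr)
    # a*b -> a \cdot b
    expr = re.sub(r"\s*\*\s*", r" \\cdot ", expr)
    return expr


print(to_latex("x^2-6"))    # x^{2}-6
print(to_latex("sqrt(4)"))  # \sqrt{4}
```

A rule-based converter like this breaks down quickly on free-form student notation, which is one reason a context-aware approach is attractive. In either case, the original text should be kept next to the converted form so that graders can verify the conversion.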

Quality Assurance

The quality assurance functionality is a key component of our AI-assisted grading platform, designed to enhance grading consistency. This feature directly addresses concerns raised in our interviews about maintaining fairness and consistency, especially in team-based grading scenarios, which all participants reported using for large exams.

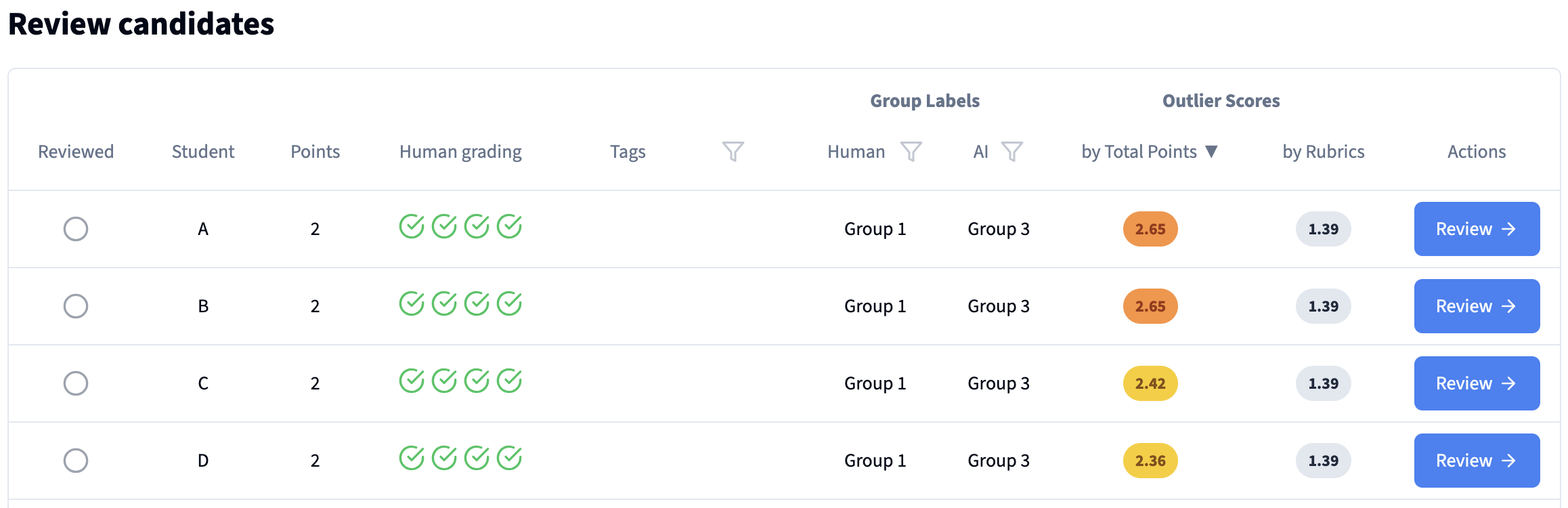

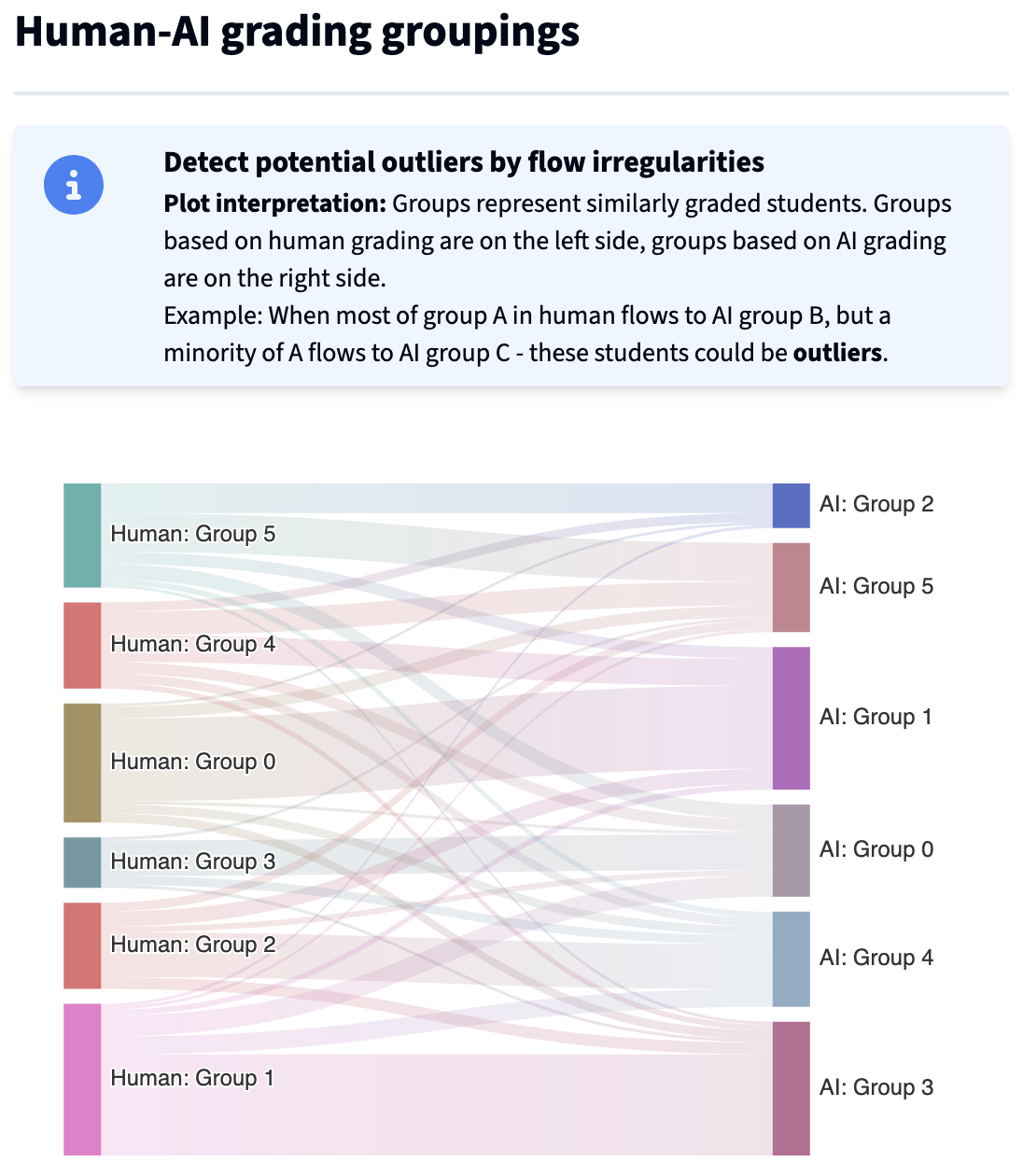

Our platform implements clustering visualization and outlier detection to help lecturers identify potential grading inconsistencies after the initial grading is complete. The system analyzes graded responses and presents them in a visual format that allows for quick identification of potential outliers or inconsistencies in grading patterns.

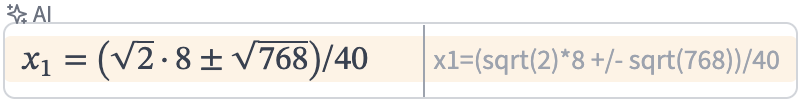

- Review table including outlier scores

The system analyzes graded responses through several integrated approaches. Clustering visualization groups similar responses visually, with human-assigned grades represented by colors, enabling quick identification of inconsistencies. The outlier detection system calculates scores based on the agreement between human grading and AI-suggested groupings, flagging responses that may need review. A comprehensive review interface provides sortable access to all graded responses, including assigned rubrics and computed outlier scores, while maintaining our commitment to preventing AI bias by never displaying AI-generated grades to human graders.
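The text does not specify how the outlier score is computed, so the following is only one possible definition, stated as an assumption: a response's score is the share of other responses in the same AI-suggested cluster that received a different human grade. The function name `outlier_scores` and the input layout (two dictionaries keyed by response id) are hypothetical.

```python
from collections import Counter, defaultdict


def outlier_scores(
    human_grades: dict[str, float],
    clusters: dict[str, int],
) -> dict[str, float]:
    """Score each response by disagreement with its cluster peers.

    Score = 1 - (share of cluster peers with the same human grade).
    0.0 means full agreement; values near 1.0 suggest a review.
    """
    grades_by_cluster: dict[int, Counter] = defaultdict(Counter)
    for rid, cluster in clusters.items():
        grades_by_cluster[cluster][human_grades[rid]] += 1

    scores: dict[str, float] = {}
    for rid, cluster in clusters.items():
        counts = grades_by_cluster[cluster]
        peers = sum(counts.values()) - 1
        if peers == 0:
            scores[rid] = 0.0  # singleton cluster: nothing to compare against
            continue
        same = counts[human_grades[rid]] - 1
        scores[rid] = 1.0 - same / peers
    return scores


# Hypothetical data: s4 sits in cluster 0 but was graded differently.
human = {"s1": 3, "s2": 3, "s3": 3, "s4": 1, "s5": 2}
groups = {"s1": 0, "s2": 0, "s3": 0, "s4": 0, "s5": 1}
scores = outlier_scores(human, groups)
```

Under this definition, `s4` receives the highest score in the example and would appear at the top of a review table sorted by outlier score. The score uses only human-assigned grades and cluster membership, so no AI-generated grade needs to be shown to the reviewer.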

- Visualization of response clusters

- Visualization of the overlap between human-graded and AI-suggested grading