Navigation auf uzh.ch

Navigation auf uzh.ch

This qualitative study complements our survey findings through in-depth interviews with five faculty members at the University of Zurich. The interviews explored current grading practices, bias awareness, and perspectives on AI-assisted grading tools. While the small sample size limits generalizability, these conversations pro-vide valuable insights into the practical challenges and opportunities in assessment practices.

All participants stated that they work in teams to share the grading workload, at least for large exams. While only three out of five participants mentioned that they split up the grading exercise by exercise, the remaining two also expressed that they believe grading exercise by exercise is superior to grading student by student. The reason the other two participants usually conduct grading student by student is efficiency, as it avoids switching between different exams, and they would change their approach if this issue were mitigated. Three participants stated that they predefine and document clear criteria for awarding points (these criteria may be extended), while two participants indicated they do not use predefined criteria.

During the interviews, several biases affecting the grading process were identified by the participants. Two lecturers highlighted the occurrence of contrast effects, where the assessment of a student's work is influenced by the quality of preceding submissions. Another commonly noted bias was the halo effect, which can arise if the grader is familiar with the students or when grading is conducted student by student. Additionally, poor handwriting was cited as negatively impacting the scores awarded to students, reflecting a bias linked to the presentation of answers. Furthermore, it was mentioned that a grader's mood, whether good or bad, can influence grading outcomes, a phenomenon related to mental depletion.

To address these biases, participants suggested several strategies. One recommended measure is to grade exercise by exercise rather than student by student, which aligns with an effective grading workflow aimed at reducing halo effects. Shuffling the order of student submissions was also proposed as a way to mitigate contrast effects and prevent mood-related biases. Lastly, to counteract the impact of poor handwriting, it was suggested to explicitly inform students that legibility may affect their scores, encouraging clearer presentation of their answers.

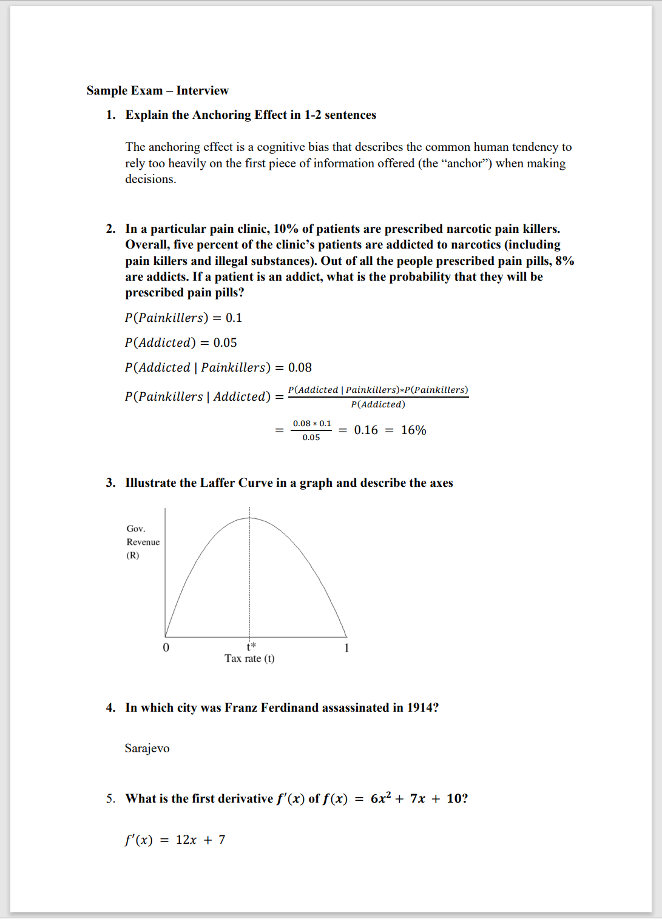

A text exercise (see Exercise 1) was shown to three participants. Two of them stated that they explicitly look for keywords when grading such an exercise, while one mentioned that he "knows the solution is correct when he sees it."

All five participants were shown a quantitative exercise (see Exercise 2). Three of them stated that they first check whether the result is correct. Of these three, two mentioned that when the result is correct, they simply verify that there is some form of derivation, whereas one stated that she examines the derivation in more detail. The two remaining participants indicated that they review the exercise from top to bottom, focusing on the derivation first.

Four out of five participants stated that they would definitely use such a tool. The remaining participant mentioned that he would be willing to use it, but only for large exams, with the break-even point estimated to be around 200 students. The workload associated with the digitization of exams is perceived as a problem, and the participants would consider it a significant mitigation if this part of the work could be outsourced to, for example, student assistants.

Sorting was perceived to be at least somewhat useful for quantitative exercises (see Exercise 2). While all participants stated that they would potentially use such a feature for quantitative exercises, two explicitly noted that the benefit was limited if they still had to review each exercise manually, and they would prefer the ability to automatically assign points to correct answers. Sorting based on keywords for text exercises (see Exercise 1) was perceived to be less useful. While all participants, except one, thought that Automated Grouping would be useful for the presented examples (see Exercises 4 and 5), none stated that exercises with such a low level of complexity occur in their exams. Four participants expressed a willingness to apply certain structural restrictions to their exams to reduce the grading workload.

One participant emphasized the importance of thoroughly checking the result, even if the software suggests it is correct (or incorrect). No explicit concerns were raised regarding automation, assuming it functions correctly. It appears that skepticism towards automated solutions is limited, provided that human intervention re-mains possible.

Here we present a list of feature ideas suggested by the participants in the interviews: