How do lecturers at the University of Zurich perceive the integration of automation in grading open-ended questions? In this section, we present a comprehensive summary of our online survey results, which explore educators' perspectives on how automated tools could improve the grading of open-ended exam questions.

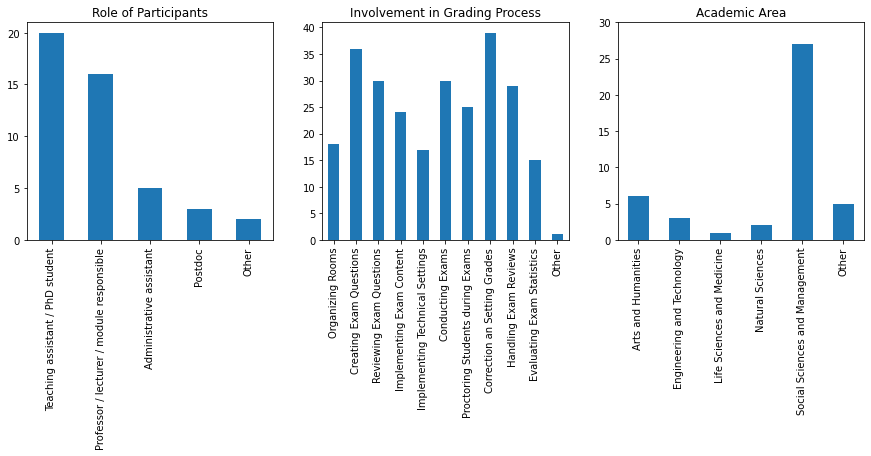

Forty-five people completed our survey and provided valuable feedback. The following graphs summarize the participants' roles, their involvement in the grading process, and the academic area in which they conduct their exams.

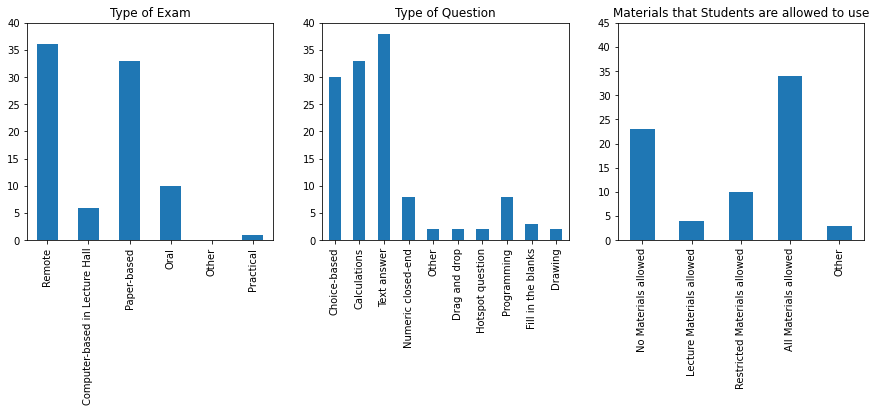

Exams at UZH differ in several respects, as shown in the graphs below: their setting (e.g., paper-based in the lecture hall), the types of questions asked (e.g., multiple choice), and the materials students are permitted to use (e.g., open-book).

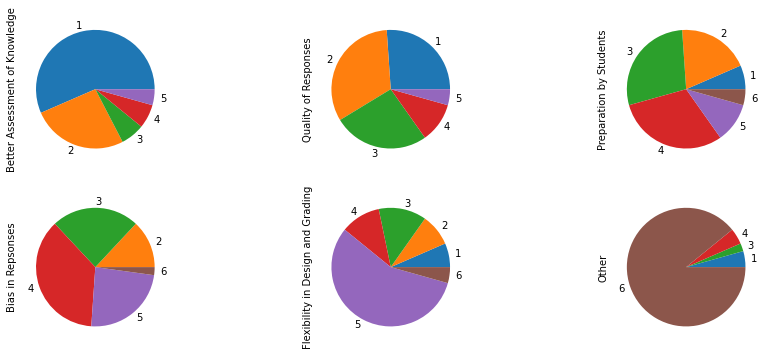

There are several reasons why an examiner may include open-ended questions in an exam. One question in our survey asked participants to rank their main benefits.

In your opinion, what are the most beneficial factors of open-ended questions? Please rank the factors by assigning their relative rank compared to the other options.

According to our survey participants, the key advantage of open-ended questions is that they allow a better assessment of students' knowledge (e.g., covering more levels of Bloom's taxonomy; average rank 1.76, where 1 is the most beneficial), followed by the improved quality of students' answers (e.g., harder to guess, more detailed; average rank 2.34). On average, the remaining aspects were perceived as considerably less beneficial. The mean and standard deviation of the ranks of all aspects can be found in the appendix.
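The average ranks above are per-factor means over all respondents. The following minimal Python sketch shows how such a summary could be computed; the factor names and the three responses are hypothetical toy data, not our survey results, and the sketch assumes the sample standard deviation (the appendix may use a different convention).

```python
from statistics import mean, stdev

# Hypothetical toy data (NOT the survey results): each dict is one
# respondent's ranking, mapping a factor to its rank (1 = most beneficial).
responses = [
    {"assess knowledge": 1, "answer quality": 2, "other": 3},
    {"assess knowledge": 2, "answer quality": 1, "other": 3},
    {"assess knowledge": 1, "answer quality": 3, "other": 2},
]


def rank_summary(responses):
    """Return (factor, mean rank, sample SD) tuples, most beneficial first."""
    summary = []
    for factor in responses[0]:
        ranks = [r[factor] for r in responses]
        # stdev() is the sample standard deviation (needs >= 2 values).
        summary.append((factor, mean(ranks), stdev(ranks)))
    # A lower mean rank means the factor was perceived as more beneficial,
    # so sort in ascending order of mean rank.
    return sorted(summary, key=lambda row: row[1])


for factor, m, sd in rank_summary(responses):
    print(f"{factor}: mean rank {m:.2f}, SD {sd:.2f}")
```

Because lower ranks mean "more beneficial", the summary is sorted in ascending order; for ratings on a 1 to 5 scale, where higher means "more challenging" or "more important", one would sort in descending order instead.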

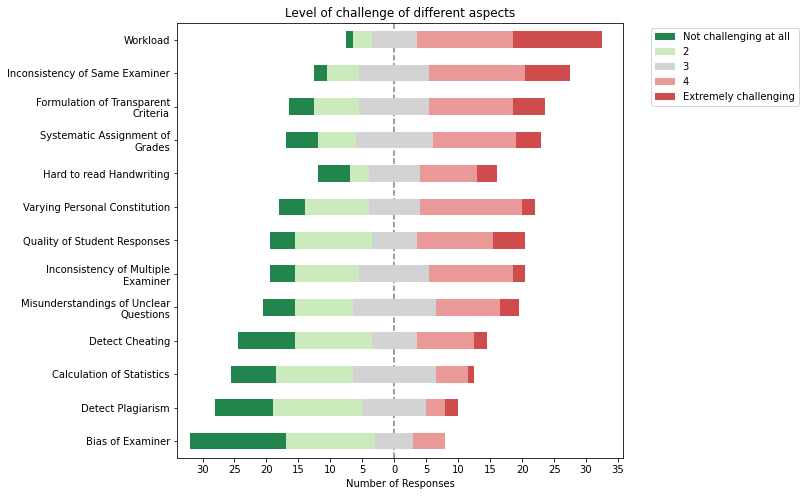

While open-ended questions provide numerous advantages, they also present challenges to examiners. One question focused on how challenging different aspects of grading open-ended questions are.

In your opinion, how challenging are these factors regarding the correction of open-ended exam questions? Please classify each of the following factors according to their level of challenge.

The participants perceived the considerable workload of grading open-ended questions as the greatest challenge (average rating 3.95/5.00), followed by examiner inconsistency (e.g., allocating a different number of points for the "same" answer; average rating 3.50) and the definition of transparent grading criteria (3.20). Further aspects rated as relatively challenging were the systematic assignment of grades (3.13), potentially hard-to-read handwriting (3.07), varying personal constitution (3.05), the quality of the students' responses (3.05), the inconsistency of multiple examiners grading the same exam (2.96), and the misunderstanding of unclear questions (2.93). On average, the remaining aspects included in our survey were perceived as less challenging. The mean and standard deviation of the rating of each challenge can be found in the appendix.

One question in our survey asked whether participants had already used software to grade open-ended questions.

Have you previously used software with the specific goal of improving the grading process of open-ended questions? If yes, what software have you used? If no, do you think software could help you with the grading process? How?

Of the 27 participants who explicitly stated whether they had used software for grading open-ended questions, 10 answered yes (i.e., 37%). The software they listed includes Ans, SEB, and EPIS, a tool developed by the Teaching Center for grading Excel exams, and, in one case, a tool the participant had created themselves. Of those who had never used such software, five explicitly stated that they think software would be useful (e.g., "Software might help in making a scheme of points to be reviewed when choosing the grade for an open question"), while ten explicitly stated that they do not believe useful software for grading open-ended questions exists (e.g., "I would be surprised if there were a software that did a reasonably good job at this").

The use of software could, at least partially, address some of the challenges of grading open-ended questions. One question in our survey asked which software features would be helpful for the grading process.

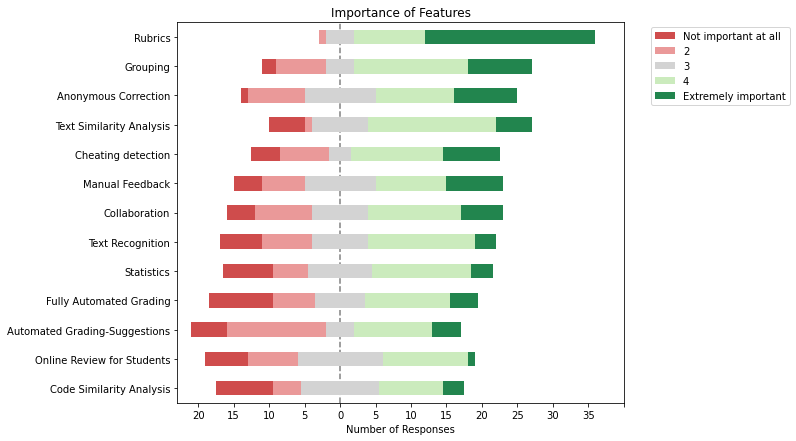

Please classify each of the following concepts according to their importance when focusing on the correction of open-ended exam questions.

The feature clearly considered most important is the ability to specify consistent correction criteria, known as rubrics (average rating 4.46). Other relevant features include automatically grouping similar answers (3.60), anonymous correction (3.48), text similarity analysis (3.46), cheating detection (3.40), and the option of writing manual feedback (3.31). On average, the remaining features included in our survey were perceived as less important. The mean and standard deviation of the rating of each feature can be found in the appendix.
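To make the idea of automatically grouping similar answers more concrete, here is a minimal Python sketch under deliberately simple assumptions: each answer is reduced to its set of lowercase words, answers are compared with Jaccard similarity, and each answer joins the first group whose first member is at least 0.5 similar. The function names, the threshold, and the example answers are hypothetical; this is not a description of how any of the tools mentioned in the survey work, and practical systems would likely use richer text representations.

```python
import re


def tokens(answer: str) -> set[str]:
    """Return the set of lowercase words in an answer (a crude representation)."""
    return set(re.findall(r"[a-z0-9]+", answer.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity: size of intersection / size of union.

    Defined as 1.0 when both sets are empty.
    """
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def group_answers(answers: list[str], threshold: float = 0.5) -> list[list[int]]:
    """Greedily group answers by similarity; returns groups of answer indices.

    Each answer joins the first existing group whose representative (the
    group's first member) has Jaccard similarity >= threshold; otherwise it
    starts a new group.
    """
    groups: list[list[int]] = []
    reps: list[set[str]] = []
    for i, answer in enumerate(answers):
        t = tokens(answer)
        for group, rep in zip(groups, reps):
            if jaccard(t, rep) >= threshold:
                group.append(i)
                break
        else:
            # No sufficiently similar group found: start a new one.
            groups.append([i])
            reps.append(t)
    return groups


# Hypothetical example answers (not from any real exam).
answers = [
    "Photosynthesis converts light energy into chemical energy",
    "Light energy is converted into chemical energy by photosynthesis",
    "Mitochondria produce ATP",
]
print(group_answers(answers))  # prints [[0, 1], [2]]
```

Grouping could let a grader apply one rubric decision to a whole cluster of near-identical answers; the threshold trades fewer groups against the risk of merging answers that differ in substance.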

Our participants shared many helpful remarks with us, ranging from suggestions for additional resources (e.g., "I think it would be interesting to have a general document describing how are failing grades/grading curves used") to affirmations of the importance of open-ended questions (e.g., "Having been a student here myself, I think multiple choice questions provide bad incentives for learning; it would be much better if they were replaced with open-ended questions, for which automated tools could be very valuable"). Skepticism toward automated solutions was also widespread in the remarks (e.g., "I think that open-ended exam questions shouldn't be automated", "Apart from multiple choice, having computers try to grade exams is a waste of time and resources").